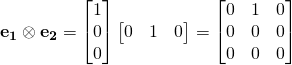

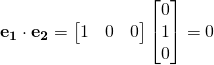

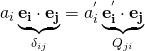

We already know:

(1) ![]()

Similarly, we need to be able to express a higher-order quantity, such as a matrix, using tensor notation:

(2) ![]()

![]() is sometimes written: ![]() , where “⊗” denotes the “dyadic” or “tensor” product.

e.g.

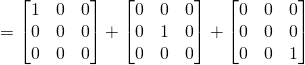

As opposed to:

![]() sum of 3 × 3 matrices = 3 × 3 matrix

![]()

![]()

![]()
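The difference between a single dyad and the full double sum can be checked numerically. The following is a minimal sketch in plain Python, assuming an orthonormal Cartesian basis and hypothetical component values; the helper names `dyad` and `assemble` are made up for this illustration and are not part of the original notes.

```python
def dyad(a, b):
    """Dyadic product of two vectors: component (i, j) is a[i] * b[j]."""
    return [[a_i * b_j for b_j in b] for a_i in a]


def assemble(components, basis):
    """Sum components[i][j] * (basis[i] dyad basis[j]) over both i and j."""
    n = len(basis)
    total = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            term = dyad(basis[i], basis[j])  # one 3 x 3 matrix per (i, j) pair
            for r in range(n):
                for c in range(n):
                    total[r][c] += components[i][j] * term[r][c]
    return total


# Assumed orthonormal Cartesian basis e_1, e_2, e_3.
E = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

# Hypothetical component values, chosen only for illustration.
A = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]

e1_e2 = dyad(E[0], E[1])  # a single dyad: one nonzero entry (row 1, column 2)
A_sum = assemble(A, E)    # nine weighted dyads summed into one 3 x 3 matrix

print(e1_e2)
print(A_sum == A)
```

A single dyad of basis vectors fills exactly one slot of a 3 × 3 matrix, so weighting the nine dyads by the components and summing them recovers the full matrix.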

Recall eq. 3 in Section 1: Tensor Notation, which states that ![]() , where ![]() is a 3×3 matrix, ![]() is a vector, and ![]() is the result of the product between ![]() and ![]() . To see why this is so, we can consider the product between ![]() and the basis vector ![]() , and then consider the product between ![]() and an arbitrary vector ![]() . Before we do this, it should be mentioned that products between tensors and vectors (producing a vector), as well as products between two tensors (producing a tensor), will directly make use of the “dot product,” even though the “dot product” operator is sometimes thought of as a “scalar product.” The “dot product” treatment used consistently throughout this text, although mathematically a bit “sloppy,” enables one to use index notation to derive quantities in a very direct manner.

The “dot product” will be used in this text to signify the tensor product between a tensor and a vector or the tensor product between two tensors. This is consistent with most of the literature in solid mechanics. A small minority of authors, however, consider this to be “sloppy” math and insist, instead, that the dot product between tensors produce a scalar value. This distinction is very important since tensor products, which produce tensors, are prevalent in solid mechanics and this text will heavily use the “dot” convention when deriving important identities and quantities. Authors that use a different convention would use a very different approach for derivation, in particular where index notation is used.

(3) ![]()

Note the use of the Kronecker delta in simplifying the expression in eq. 3.

(4) ![]()

Again, the result is a vector; this time with components ![]() , as expected (eq. 3 in Section 1: Tensor Notation).
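As a numerical check of the two products just discussed, here is a minimal sketch in plain Python. It assumes the component form is the usual matrix-vector rule (sum over the repeated index j of A_ij v_j); the function name `dot_tensor_vector` and all numerical values are hypothetical.

```python
def dot_tensor_vector(A, v):
    """Component i of the result is the sum over j of A[i][j] * v[j]."""
    return [sum(A[i][j] * v[j] for j in range(len(v))) for i in range(len(A))]


# Hypothetical component values, chosen only for illustration.
A = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]

e2 = [0, 1, 0]   # basis vector e_2 in an assumed orthonormal Cartesian basis
v = [1, 0, -1]   # an arbitrary vector

col2 = dot_tensor_vector(A, e2)  # the Kronecker delta leaves only column 2 of A
u = dot_tensor_vector(A, v)      # ordinary matrix-vector multiplication

print(col2)
print(u)
```

Dotting with a basis vector keeps one column of the matrix, which is the Kronecker delta at work; dotting with an arbitrary vector is the weighted sum of those columns.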

note: Eq. 4 is standard matrix multiplication. We had to use a “dot” product to get the tensor algebra to work as a typical matrix – vector multiplication (we’ll see later that

note: Remember, in the above derivations, only if

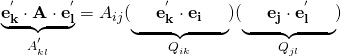

So, consider eq. 3. Let’s “pick out” a particular component of this tensor, as follows:

Multiplying both sides by ![]()

![]()

![]()

or, re-written:

(5) ![]()

note: The LHS of eq. 5 is a single term; i.e., this is how you pick out a single component of a matrix.
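The idea of picking out one component can be tried numerically. This is a sketch only: it assumes eq. 5 has the usual form in which a tensor is dotted with one basis vector on each side, and it assumes an orthonormal Cartesian basis. The helper names and the values in `A` are hypothetical.

```python
def dot(a, b):
    """Scalar product of two vectors."""
    return sum(x * y for x, y in zip(a, b))


def dot_tensor_vector(A, v):
    """Component i of the result is the sum over j of A[i][j] * v[j]."""
    return [sum(A[i][j] * v[j] for j in range(len(v))) for i in range(len(A))]


def component(A, e_i, e_j):
    """Dot the tensor with e_j on the right, then with e_i on the left."""
    return dot(e_i, dot_tensor_vector(A, e_j))


# Assumed orthonormal Cartesian basis and hypothetical component values.
E = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
A = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]

A_23 = component(A, E[1], E[2])  # row 2, column 3 in 1-based index notation
print(A_23)
```

Here the right-hand dot product selects a column and the left-hand dot product then selects a row within it, leaving a single scalar.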

note: For

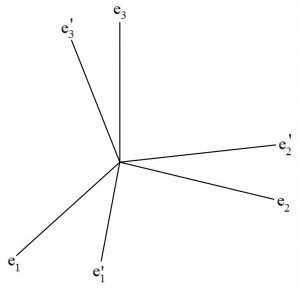

Consider the following coordinate system (see Figure):

![]() ; ![]()

we know: ![]()

![]() (terms [components] of a rotation matrix)

To be more useful, we need to show that ![]() .

By definition, ![]()

Multiply both sides by ![]() :

And, since ![]() (this should be obvious):

(6) ![]()

note: ![]() is an “orthogonal” tensor. A particular identity of an orthogonal tensor can be written as follows:

(7) ![]()

For an orthogonal tensor, ![]() (obeys eq. 7), it can be shown that ![]() (a proof can be found in [Asaro])
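Orthogonality is easy to check numerically. The sketch below is written under two assumptions that are not confirmed by the text above: that the rotation components are the dot products between rotated and original basis vectors (rotated index first), and that eq. 7 has the usual form "tensor times its transpose equals the identity." The 30° rotation about the third axis and all names are hypothetical.

```python
import math


def dot(a, b):
    """Scalar product of two vectors."""
    return sum(x * y for x, y in zip(a, b))


def rotation_components(primed_basis, basis):
    """Q[i][j] = (rotated basis vector i) . (original basis vector j)."""
    return [[dot(e_p, e) for e in basis] for e_p in primed_basis]


def transpose(X):
    return [list(row) for row in zip(*X)]


def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


theta = math.radians(30.0)  # hypothetical rotation angle about e_3
c, s = math.cos(theta), math.sin(theta)

E = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]   # original basis
E_primed = [[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]]   # rotated basis

Q = rotation_components(E_primed, E)
QQt = matmul(Q, transpose(Q))

# Compare against the identity matrix within a small floating-point tolerance.
is_orthogonal = all(
    math.isclose(QQt[i][j], 1.0 if i == j else 0.0, abs_tol=1e-12)
    for i in range(3) for j in range(3)
)
print(is_orthogonal)
```

The check succeeds because the rows of `Q` are the rotated unit vectors expressed in the original basis, and those vectors are mutually orthonormal.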

![]()

![]()

where ![]()

![]()

From eq. 6, we know that ![]()

![]()

![]()

![]()

(8) ![]()

In eq. 8, ![]()

(assuming we know the unit vectors defining the original and rotated coordinate systems).
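To make the vector transformation concrete, here is a minimal sketch in plain Python. It assumes eq. 8 has the common form in which each new component is the rotation matrix contracted with the old components, with rotation components built from dot products of rotated and original basis vectors (rotated index first). The 90° rotation about the third axis, the vector `v`, and the helper names are all hypothetical.

```python
def dot(a, b):
    """Scalar product of two vectors."""
    return sum(x * y for x, y in zip(a, b))


def rotation_components(primed_basis, basis):
    """Q[i][j] = (rotated basis vector i) . (original basis vector j)."""
    return [[dot(e_p, e) for e in basis] for e_p in primed_basis]


def transform_vector(Q, v):
    """New component i is the sum over j of Q[i][j] * v[j]."""
    return [sum(Q[i][j] * v[j] for j in range(len(v))) for i in range(len(Q))]


# Original basis, and a basis rotated by 90 degrees about e_3 (hypothetical).
E = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
E_primed = [[0, 1, 0], [-1, 0, 0], [0, 0, 1]]

Q = rotation_components(E_primed, E)

v = [3, 4, 0]                     # components in the original system
v_primed = transform_vector(Q, v)  # components in the rotated system

print(v_primed)
print(dot(v, v) == dot(v_primed, v_primed))  # length is unchanged
```

Only the components change; the vector itself, and therefore its length, is the same in both coordinate systems.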

What about transforming a tensor?

![]()

![]()

The following will be simply stated, and then proved:

(9) ![]()

Tensor product practice and quick proof of eq. 9:

![]()

Now taking ![]() to be ![]() , we find:

![]()

Thus, ![]()

Setting ![]() , we can see that the components are ![]() , i.e. the desired result (eq. 9).
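The tensor transformation can be checked the same way. This sketch assumes eq. 9 has the common form in which each index of the tensor is contracted with one copy of the rotation matrix, which is equivalent to the matrix product "rotation times tensor times rotation transpose." The 90° rotation about the third axis, the component values, and the function names are hypothetical.

```python
def transform_tensor(Q, A):
    """New component (i, j) is the sum over k, l of Q[i][k] * Q[j][l] * A[k][l]."""
    n = len(A)
    return [[sum(Q[i][k] * Q[j][l] * A[k][l]
                 for k in range(n) for l in range(n))
             for j in range(n)] for i in range(n)]


def transpose(X):
    return [list(row) for row in zip(*X)]


def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


# Hypothetical rotation by 90 degrees about e_3, and hypothetical components.
Q = [[0, 1, 0], [-1, 0, 0], [0, 0, 1]]
A = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]

A_index = transform_tensor(Q, A)               # index-notation form
A_matrix = matmul(matmul(Q, A), transpose(Q))  # matrix-product form

trace_before = sum(A[i][i] for i in range(3))
trace_after = sum(A_index[i][i] for i in range(3))

print(A_index)
print(A_index == A_matrix, trace_before == trace_after)
```

Both forms give the same components, and the trace is unchanged, as expected for an invariant of the tensor.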

note: We put a “dot” to get the tensor algebra to work. It’s really just a standard matrix product. If ever a product is written

- R. J. Asaro and V. A. Lubarda, Mechanics of Solids and Materials, Cambridge, UK: Cambridge University Press, 2006.
