Throughout this text, we will be working in 3D. The deformations of a body will be described using three-component tensors (i.e., vectors), which carry both magnitude and direction. Three components suffice because, as a body deforms, each point within it undergoes a motion that can be described as a simple translation in 3D space. Once we begin looking at strains (and stresses), we will have to consider the motion of elements rather than points. Since elements can deform axially in three directions as well as undergo shear, the description of stresses and strains will require higher-order tensors (i.e., matrices).

“Tensor” notation (index notation) is essentially a way of keeping track of the components of matrices when performing matrix operations or algebra, without the need to repeatedly draw matrices. In this way, tensors save paper! Tensor notation also lends itself nicely to computer programming. Having said that, most of the derivations in the later chapters involve algebra that the reader is probably already familiar with. For example, the product of an $n \times 1$ vector and a $1 \times n$ vector produces an $n \times n$ matrix, while a dot product between the same two vectors produces a scalar. Similarly, matrix products (denoted with a “$\cdot$” for reasons that are explained in this chapter), inverses, transposes, and other operations are probably already familiar, as are the basic properties of these operations (e.g., matrix multiplication is associative but not commutative). Thus, it is possible to jump right into the later chapters without studying tensors and only risk becoming “stuck” on the few derivations that operate explicitly on components of matrices.
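The contrast between the two products of the same pair of vectors can be seen in a few lines of code. This is a minimal Python sketch that assumes vectors are stored as plain lists of numbers. The helper names `outer` and `dot` are hypothetical and were chosen only for this illustration.

```python
# Minimal sketch (hypothetical helpers): the product of an n x 1 column vector
# and a 1 x n row vector gives an n x n matrix, whereas the dot product of the
# same two vectors gives a single scalar. Vectors are plain Python lists.

def outer(u, v):
    """Return the n x n matrix whose (i, j) component is u_i * v_j."""
    return [[ui * vj for vj in v] for ui in u]

def dot(u, v):
    """Return the scalar sum of u_i * v_i over all i."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(ui * vi for ui, vi in zip(u, v))

u = [1.0, 2.0, 3.0]
v = [4.0, 5.0, 6.0]

M = outer(u, v)  # 3 x 3 matrix
s = dot(u, v)    # scalar
print(M)  # [[4.0, 5.0, 6.0], [8.0, 10.0, 12.0], [12.0, 15.0, 18.0]]
print(s)  # 32.0
```

Both products use the same input data, but the result has a different "shape." Index notation tracks that shape automatically, as the rest of this chapter explains.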

Tensors (matrices and vectors) will be written in boldface. When their components are of interest, alphabetical subscripts (“indices”) will be used and the boldface removed, since each component of a tensor is merely a scalar. Most vectors will be written as lower-case English letters, while most higher-order tensors will be written using upper-case English letters or Greek letters. Only rectangular (Cartesian) coordinate systems will be considered.

So how does tensor math work, and, in particular, what is “index notation”? Further, which tensor operations are going to be most important for us? These are the questions that will be addressed in this chapter.

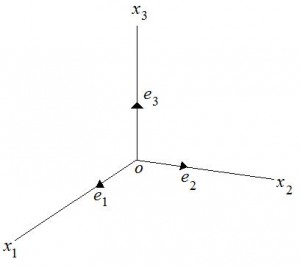

Consider a typical rectangular coordinate system defined by the unit vectors $\mathbf{e}_1$, $\mathbf{e}_2$, and $\mathbf{e}_3$ (see Figure):

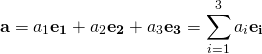

We know that any vector $\mathbf{v}$, with components $v_1$, $v_2$, and $v_3$, can be defined in terms of the unit vectors $\mathbf{e}_1$, $\mathbf{e}_2$, and $\mathbf{e}_3$ as follows:

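$$\mathbf{v} = v_1\mathbf{e}_1 + v_2\mathbf{e}_2 + v_3\mathbf{e}_3 = \sum_{i=1}^{3} v_i\mathbf{e}_i \tag{1}$$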

Our convention (sometimes called the “Einstein Summation Convention”) will be to simply drop the $\sum$ symbol, so that eq. 1 is written as $\mathbf{v} = v_i\mathbf{e}_i$. A subscript (or “index”) that is repeated within a term will be assumed to be summed from 1 to 3. This is one of the important ideas of “tensor notation” (or “index notation”).
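In code, the summation convention corresponds to an explicit loop over the repeated index. The sketch below rebuilds a vector from its components using eq. 1, $\mathbf{v} = v_i\mathbf{e}_i$. It assumes the standard Cartesian unit vectors, stored as Python lists, and uses Python's 0-based indexing, so the index runs over 0, 1, 2 instead of 1, 2, 3. The names `E` and `from_components` are hypothetical.

```python
# Sketch of the summation convention applied to eq. 1: v = v_i e_i.
# The repeated index i is summed (0..2 here, because Python counts from 0).
# E and from_components are hypothetical names used only for illustration.

# Unit vectors of a rectangular (Cartesian) coordinate system.
E = [
    [1.0, 0.0, 0.0],  # e_1
    [0.0, 1.0, 0.0],  # e_2
    [0.0, 0.0, 1.0],  # e_3
]

def from_components(components, basis=E):
    """Return the vector sum of components[i] * basis[i] over i."""
    result = [0.0, 0.0, 0.0]
    for i in range(3):      # repeated index i: summed
        for k in range(3):  # k runs over the entries of each basis vector
            result[k] += components[i] * basis[i][k]
    return result

print(from_components([2.0, -1.0, 5.0]))  # [2.0, -1.0, 5.0]
```

With the Cartesian unit vectors, the rebuilt vector has the same entries as its components. The point of the sketch is that the omitted $\sum$ reappears as the loop over `i`.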

note:

The dot product between two vectors can be written in index notation:

$$\mathbf{u}\cdot\mathbf{v} = u_1v_1 + u_2v_2 + u_3v_3 = u_iv_i \tag{2}$$
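Eq. 2 can be evaluated term by term. The index $i$ is repeated in $u_iv_i$ and no index is left free, so the result is a scalar. This is a minimal Python sketch assuming 3-component vectors stored as lists. The function name `dot_index` is hypothetical.

```python
# Sketch of eq. 2, u . v = u_i v_i: the repeated index i is summed, and
# because no free index remains, the result is a single scalar.
# dot_index is a hypothetical name used only for illustration.

def dot_index(u, v):
    """Evaluate u_i v_i for 3-component vectors u and v."""
    total = 0.0
    for i in range(3):  # repeated index i: summed
        total += u[i] * v[i]
    return total

u = [1.0, 2.0, 3.0]
v = [4.0, -5.0, 6.0]
print(dot_index(u, v))  # 12.0  (= 4 - 10 + 18)
```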

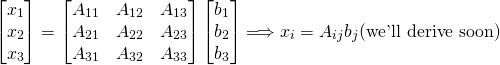

We know that the multiplication of a matrix and a vector results in a vector. The components of this vector can be expressed as follows:

$$b_i = A_{ij}x_j \tag{3}$$

where $\mathbf{b} = \mathbf{A}\mathbf{x}$.

In eq. 3, we are summing over “$j$” only, because the practice is to sum only over indices that are “repeated” within a given term of an expression. Another way to think about it, which will always work in this text unless otherwise stated, is that “repeated indices” appear on only one side of the equation, which indicates that they should be summed. The “free index,” which in eq. 3 is “$i$,” appears on both the left-hand side and the right-hand side of the equation (and appears only once in any given term), and therefore we know not to sum over “$i$.”
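The distinction between free and repeated indices maps directly onto nested loops. The outer loop runs over the free index $i$ and produces one result component per value of $i$. The inner loop sums over the repeated index $j$. This is a sketch of eq. 3, $b_i = A_{ij}x_j$, assuming a $3 \times 3$ matrix stored as a list of rows. The function name `mat_vec` is hypothetical.

```python
# Sketch of eq. 3, b_i = A_ij x_j.
# Free index i: not summed; there is one result component per value of i.
# Repeated index j: summed for each fixed i.
# mat_vec is a hypothetical name used only for illustration.

def mat_vec(A, x):
    """Evaluate b_i = A_ij x_j for a 3 x 3 matrix A (list of rows) and a 3-vector x."""
    b = []
    for i in range(3):      # free index i
        b_i = 0.0
        for j in range(3):  # repeated index j: summed
            b_i += A[i][j] * x[j]
        b.append(b_i)
    return b

A = [
    [1.0, 2.0, 0.0],
    [0.0, 1.0, 3.0],
    [4.0, 0.0, 1.0],
]
x = [1.0, 1.0, 2.0]
print(mat_vec(A, x))  # [3.0, 7.0, 6.0]
```

For comparison, NumPy's `numpy.einsum` expresses the same contraction with subscript strings, as in `numpy.einsum('ij,j->i', A, x)`. In its implicit mode, einsum sums repeated subscripts, which mirrors the convention described here.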

Alternatively, consider:

![]()

![]()

![]() =scalar


note: In eq. 3, “